This is default featured slide 1 title

Go to Blogger edit html and find these sentences.Now replace these sentences with your own descriptions.

This is default featured slide 2 title

Go to Blogger edit html and find these sentences.Now replace these sentences with your own descriptions.

This is default featured slide 3 title

Go to Blogger edit html and find these sentences.Now replace these sentences with your own descriptions.

This is default featured slide 4 title

Go to Blogger edit html and find these sentences.Now replace these sentences with your own descriptions.

This is default featured slide 5 title

Go to Blogger edit html and find these sentences.Now replace these sentences with your own descriptions.

Sunday, 19 April 2009

11 G New Features

Saturday, 11 April 2009

Informatica Metadata Queries (Part 3)

Saturday, 4 April 2009

Informatica Strange Problems

Error : Informatica Debugger: Invalid session can not be debugged .Select a valid session instance to use

Problem : You are trying to run your mapping in debug mode by selecting valid session but you are getting below error even though your session is valid.

Solution :

1) Copy your mapping Suppose M1 as M1_Debug

2) Make your session as reusable

3) Making your Initial Mapping M1 as invalid by someway

4) Now refresh your session it will ask for new mapping then select M1_debug

5) Revalidate your session

6) Try to run your mapping(M1_debug) using second option use existing reusable instance for mapping

Now you should be able to run your mapping in debugger mode

Though it not a 100% correct method but it worked in my case but it is worth trying

Error : CMN_1892 Error: Data for Lookup [] fetched from the file [] is not sorted on the condition ports

Though it not a 100% correct method but it worked in my case but it is worth trying

Please not both of these issues we encountered on Informatica 7.1.1

Thursday, 2 April 2009

How to validate all mappings in a particular folder

In this post we will discuss how to validate all mapping in a particular folder

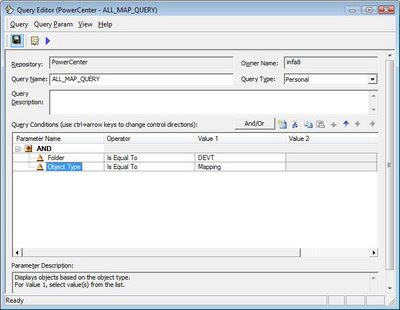

Step1 : Go to the Repository manager client

Step2 :

Go to option Tools->Queries

Create a new query with name ALL_MAP_QUERY

Create a query with parameter name and condition to retrieve the mappings in a folder

as the following:

folder = folder-name

object type = mapping

Step 3:

a) Connect to power center repository using pmrep

pmrep connect -r RepositoryName -d DomainName -n UserId -x Passwd

b) Create a Persistent output file using command

pmrep executequery -q ALL_MAP_QUERY -u d:\infa8\PERS_OP_FILE

Step 4 :Use the persistent output file created in last step to validate mappings

pmrep validate -i d:\infa8\PERS_OP_FILE -u PERS_OP_FILE_LOG

Sunday, 22 March 2009

Informatica Useful Tips (Part1)

It is possible by defining a mapping parameter for the WHERE clause of the SQL Override. When you need all records from the source, define this parameter as 1=1 in theparameter file and in case you need only selected data, set the parameter accordingly.

Tip 2 : Overcome size limit for a SQL Override in a PowerCenter mapping

The SQL editor for SQL query overrides has a limit of maximum of 32,767 characters.

To overcome this we can do following

To source a SQL of more than 32,767 characters do the following:

1. Create a database view using the SQL query override.

2. Create a Source Definition based on this database view.

3. Use this new Source Definition as the source in the mapping

Tip 3. :Export an entire Informatica folder to a xml file

We can do this in 8.1.1,

1) In designer Select Tools -> Queries and create a newquery. Set the Parameter Name "Folder" equal to the Folder you want to export and then run the query.

2) In the Query Results window, choose Edit -> Select All Then select Tools -> Export to XML File andenter a file name and location. Full Folder willbe exported to an XML file.

We can also use the query tool in Repository Manager, to geteverything in the folder (mappings, sessions, workflows, etc.)

Tip 4 : Validate all mappings in a folder

We can validate all mappings in a folder in following way:

1. Go to the Repository manager client

2. Create a query with parameter name and condition to retrieve the mappings in a folder

as the following:

folder = folder-name

object type = mapping

Use the following Pmrep Execute query command to get persistent output file:

executequery -q

We can write the result to a persistent output file.If the query is successful, it returns the total number of qualifying records.We can use newly created persistent output file as a input file in the following pmrep validate command :

Pmrep validate-i

Tip 5 : If you are getting following error

CMN_1022 [

[Microsoft][ODBC Driver Manager] Driver's SQLSetConnectAttr failed[Microsoft][ODBC Excel Driver]Optional feature not implemented

Database driver error...

Function Name : SetConnectOption

Database driver error...

Function Name : AutoCommitOff]

Solution :to make an entry of excel ODBC in powermart.ini file in informatica folder

If you need some more tips please mail us at support@itnirvanas.com.My Profile

Wednesday, 18 March 2009

Informatica Metadata Queries (Part 2)

Purpose : To Find Tracing Level for Session

Query :

select task_name,decode (attr_value,0,'None',1,'Terse',2,'Normal',3,'Verbose Initialisation',4,'Verbose Data','') Tracing_Level

from REP_SESS_CONFIG_PARM CFG,opb_task TSK

WHERE CFG.SESSION_ID=TSK.TASK_ID

and tsk.TASK_TYPE=68

and attr_id=204 and attr_type=6

Description : This query will give tracing information along with session names.This query is helpful in identifying the session which are having particular type of Tracing level like Verbose.

Purpose : To Find name of all stored procedure being used in stored procedure transformation

Query :

select attr_value from

OPB_WIDGET_ATTR

where widget_type=6 and attr_id=1

Description : This query is helpful when you require to know name of all stored procedure being used in informatica.

Purpose : To find who saved mapping last time

Query :

SELECT substr(rpl.event_time,7,4) substr(rpl.event_time,6,1) substr(rpl.event_time,1,5) ' ' substr(rpl.event_time,12,11) "EventTimestamp" ,

usr.user_name "Username",

DECODE(rpl.object_type_id,21,s21.subj_name,('('rpl.object_type_id')')) "Folder",

obt.object_type_name "Type",

DECODE(rpl.object_type_id,21,map.mapping_name,('('rpl.object_type_id')')) "Object"

FROM

opb_reposit_log rpl,opb_object_type obt,

opb_subject fld,opb_mapping map,opb_users usr,opb_subject s21

WHERE obt.object_type_name = 'Mapping'

AND rpl.object_type_id = obt.object_type_id

AND rpl.object_id = map.mapping_id(+)

AND rpl.object_id = fld.subj_id(+)

AND rpl.event_uid = usr.user_id

AND map.subject_id = s21.subj_id(+)

ORDER BY(substr(rpl.event_time,7,4) substr(rpl.event_time,6,1)

substr(rpl.event_time,1,5) ' ' substr(rpl.event_time,12,11)) DESC

Description : This query is helpful when you want to know who saved the mapping last time .

If you need some information and need query about something different related to metadata query please mail us at support@itnirvanas.com.My Profile

Sunday, 22 February 2009

Unix Interview Questions (Part1)

Wednesday, 18 February 2009

Informatica Metadata Queries (Part1)

Sunday, 15 February 2009

Oracle Deferred Constraints

Recently we came across a unique situation of updating a primary key of master table which being referenced by child table.Problem with updating primary key of master table was that it would have made child table orphan (ora-02292 child found)and we could not even update child table as it would have violated referential integrity.

To overcome this we used the deferrable constraint introduced by oracle.A deferrable constraint is only checked at transaction commit time.We altered the foreign key on child table to make constraint deferrable .After altering the foreign key constraint on child table we were able to update master table primary key with new values as constraint checking was deferred till commit point.

Now we will explain the deferrable constraints in detail.

Constraint are of three types

1) Non deferrable (Check constraint at time of commit and can not be deferred)

2) Deferrable-Initially immediate (Check constraint at time of statement execution )

3) Deferrable-Initially deferred (Check constraint at time of commit)

SQL> create table test_def (a varchar2(1) constraint chk_a check ( a in ('X','Y','Z') )

2 deferrable

3* initially immediate);

Table created.

SQL> insert into test_def values ('C');insert into test_def values ('C');

*ERROR at line 1:ORA-02290: check constraint (ITN.CHK_A) violated

Error as constraint is being checked at time of statement execution. Now we will change constraint to initially deferred.

SQL> alter table test_def modify constraint chk_a initially deferred;

Table altered.

SQL> insert into test_def values ('C');

1 row created.

SQL> commit ;

*ERROR at line 1:ORA-02091: transaction rolled back ORA-02290: check constraint (ITN.CHK_A) violated

As constraint is initially deferred it is being checked at commit type.

When should we use deferrable constraint :

Deferred integrity constraints should only be used when absolutely necessary but following are some scenario where deferrable constraint are used

1) In case of inserting large amounts of data into a table in a data warehousing environment lot of time can be saved.

2) Design issues (Updating Primary Key of Parent table in Parent Child table scenario).

Which constraints are deferrable? Only constraint created with the deferrable option can be deferred.by default constraints created are non-deferrable.

How to check constraint is deferrable ?

SELECT constraint_name , deferrable , deferred

FROM user_constraints

WHERE constraint_name like 'test_def%';

This article will not be complete without mention of option No Validate with constraints.

No Validate option with constraint : No Validate option will direct Oracle to enable the constraint but not to check existing data for constraint violation.This is particular useful where we have existing data that violates the constraint but urgent business requirement to enable constraints to avoid any future data with constraint violation.

If you have any question please contact us at support@ITNirvanas.comMy Profile

Wednesday, 11 February 2009

Informatica interview questions (Part 3)

Q 1 What is the use of Shared Folder?

Ans : Shared folders allow users to create shortcuts to objects in the folder.If you have an object that you want to use in several mappings or across multiple folders, we can place the object in a shared folder.we can then access the object from other folders by creating a shortcut to the object. Shortcuts inherit changes to their shared object. Shared folders in global repositories can be used by any folder in the domainOnce you make a folder shared, you cannot reverse it.

Q2 What are the different ways to migrate from one environment to another in Informatica

Ans

1.We can export repository and import into the new environment

2. We can use informatica deployment groups

3. We can Copy folders/objects

4. We can Export each mapping to xml and import in new environment

Q3 What is difference between mapping parameter and variable

Ans A Mapping parameter is a static value that you define before running the session and it value remains till the end of the session.when we run the session PowerCenter evaluates the value from the parameter and retains the same value throughout the session. When the session run again it reads from the file for its value.

A Mapping variable is dynamic or changes anytime during the session. PowerCenter reads the intial value of the variable before the start of the session and changes its value by using variable functions and before ending the session its saves the current value (last value held by the variable). Next time when the session runs the variable value is the last saved value in the previous session.

Q4 What is DTM

Ans DTM (Data Transformation Manager) is the process associated with the session taskThe Load Manager creates one DTM process for each session in the workflow. The DTM process performs the following tasks:

1.Reads session information from the repository.

2.Expands the server, session, and mapping variables and parameters.

3.Creates the session log file. Validates source and target code pages.

4.Verifies connection object permissions.

5.Runs pre-session shell commands, stored procedures and SQL.

6.Creates and runs mapping, reader, writer, and transformation threads to extract, transform, and load data.

7. Runs post-session stored procedures, SQL, and shell commands. Sends post-session email.

Q5 How to delete duplicate record in Informatica

Ans : Following are ways to remove duplicate records

1. In source qualifier use select distinct

2. Use Aggregator and group by all fields

3. Override SQL query in Source qualifier

Q6 What are different type of repositories that can be created using Informatica Repository Manager?

Ans

1. Standalone Repository : A repository which functions individually and is unrelated to any other repositories.

2. Global Repository : This is a centralized repository in a domain. This repository can contain shared objects across the repositories in a domain. The objects are shared through global shortcuts.

3. Local Repository : Local repository is within a domain . Local repository can connect to a global repository using global shortcuts and can use objects in it’s shared folders.

Q 7 How to find all invalid mappings in a folder

Ans Use following query

SELECT MAPPING_NAME FROM REP_ALL_MAPPINGS WHERE SUBJECT_AREA='YOUR_FOLDER_NAME' AND PARENT_MAPPING_IS_VALIED <>1

Q 8 What are the data movement modes in informatica?

Ans Data movement modes determines how power center server handles the character data. We choose the data movement in the informatica server configuration settings. Two types of data movement modes available in informatica.

1.ASCII mode

2.Unicode mode.

Q9 What are Limitations on joiner transformation ?

Ans

1.Both pipelines begin with the same original data source.

2.Both input pipelines originate from the same Source Qualifier transformation.

3.Both input pipelines originate from the same Normalizer transformation.

4.Both input pipelines originate from the same Joiner transformation.

5.Either input pipelines contains an Update Strategy transformation.

6.Either input pipelines contains a Sequence Generator transformation.

Q10 What will happen if we connect only current value port from seq generator to next transformation (without connecting nextval)

Ans Each target will get the value 1.

If you have any doubt or need more questions please mail us at support@itnirvanas.com

Saturday, 7 February 2009

Kimball Vs inmon

Bill Inmon Approach : According to Bill Inmon Data warehouse need to fulfill need of all category of users .In an organization there are different type of user like

· Marketing

· Supply Change Management

· Operations

Each department has its different way of interpreting data so Data warehouse should be able to answer each department queries. This can be achieved by designing tables in 3NF form. According to him data in Datawarehouse should be in 3NF and lowest granularity level. The data should be accessible at detailed atomic levels by drilling down or at summarized levels by drilling up.

He stressed that data should be organized into subject oriented, integrated, non volatile and time variant structures. According to him an organization have one Data warehouse and Data mart source there information from Data warehouse. Inmon Approach is also called Top Down approach .

In this methodology data is brought into staging area from OLTP system or ODS (Operational Data store) and then summarized and aggregated. After this process data mart will source their data from data warehouse and will apply new set of transformation and aggregation according to their need.

In this methodology data is brought into staging area from OLTP system or ODS (Operational Data store) and then summarized and aggregated. After this process data mart will source their data from data warehouse and will apply new set of transformation and aggregation according to their need.Key points to be noted about this approach

2.Data in 3rd Normalization form

3.Top to down approach

2. Well integrated

Difficult to implement

Ralph Kimball Approach :

In this approach data is always stored in dimensional model.

2. Bottoms Approach

3. Data ware house is Virtual

4. Bottoms's up approach

Fast to build

Cons of Ralph Kimball approach

Difficult to maintain because of redundancy of data across data marts

Tuesday, 3 February 2009

Best Practices in Informatica (Part1)

1) Always try to add expression transformation after source qualifier and before Target. If source or target definition changes it is easier to reconnect the ports

2) Always use Cobol File for normaliser in binary format otherwise there are lot of issues specially with comp-3 fields

3) Remove unused ports, though unused ports do not have any effect on performance bit it is always better to remove them for more visibility

4) If possible try to do calculation in Output Ports instead of variable ports as variable ports are need to assign/reassign each time and it can slow down the performance

5) Try to avoid complex filter expression instead of that try to evaluate filter expression in upstream expression transformation and pass it to filter transformation. If you use too many complex calculations in filter condition expression it can slow down performance.

6) In workflow Source/Target directory Property take advantage of Unix links. Instead of hard coding path in source/target directory specify path with Unix link

i.e. suppose in devt environment you are specifying Source directory path as /devserver/team/source and in prod server you specify it as /prodserver/team/source .You can get link created in $PMRootDir in as src_file_dir pointing to /devserver/team/source in dev server and /prodserver/team/source in prod server and in your source/Target file directory you can put path as $PMRootDir/src_file_dir In this case there is no need to change Source/Target directory every time you move between production and dev and testing

7) In sequence generator do not connect current value port to downstream (unless required) transformation as when we connect current value port from sequence generator transformation Informatica Server processes one row in each block. We can optimize performance by connecting only the NEXTVAL port in a mapping .

8) Improve lookup performance by putting all conditions that use the equality operator ‘=’ first in the list of conditions under the condition tab.

9) Always remember rule not to cache look tables having more than 550000 rows (Assuming row size 1024) .If your row size is less than or more than 1024 then adjust number of rows accordingly .

10) Avoid calculating same value again and again. Instead of that store it in a variable use it several times.

If you have further queries then please mail to support@itnirvanas.com

Monday, 2 February 2009

Unix Script to Automate FTP Process

Normally we come across the situation when we have to FTP file daily.Doing this process daily for large no of files can be cumbersome.We can create Unix script to automate FTP Process

Currently we need to last day files from production to dev box for testing,so we created below mentioned script to FTP files daily

b=`TZ=CST+24 date +%y%m%d` ###To get last date

cd /export/home/mydir

ftp -v -n FTP_HOST_NAME EOF ##Please put two less than sign before EOF

user Userid Pwd

bin

cd /export/home/source_dir

get FILE_NAME.`echo $b`

bye

EOF

Please note replace FTP_HOST_NAME,Userid,Pwd with your actual userid,password and ftp host name.

Please put two less than sign before EOF in third line of script as i am not able to display those characters due to some restrictions.

If you have further queries then please mail to support@itnirvanas.comThursday, 29 January 2009

Informatica interview questions (Part 2)

We will continue with second set of questions

Q 1. What is the difference between Connected and Unconnected Lookups?

Ans : Connected Lookup

1.Connected Lookup Receives input values directly from the pipeline.

2.Connected Lookup You can use a dynamic or static cache.

3.Connected Lookup Cache includes all lookup columns used in the mapping

4.Connected Lookup Can return multiple columns from the same row or insert into the dynamic lookup cache.

5.Connected Lookup If there is no match for the lookup condition, the Informatica Server returns the default value for all output ports. If you configure dynamic caching, the Informatica Server inserts rows into the cache.

6.Connected Lookup Pass multiple output values to another transformation. Link lookup/output ports to another transformation.

7.Connected Lookup Supports user-defined default values

Unconnected Lookup

1.Unconnected Lookup Receives input values from the result of a :LKP expression in another transformation..

2.Unconnected Lookup You can use a static cache.

3.Unconnected Lookup Cache includes all lookup/output ports in the lookup condition and the lookup/return port.

4.Unconnected Lookup The dynamic lookup cache, Designate one return port (R). Returns one column from each row

5.Unconnected Lookup If there is no match for the lookup condition, the Informatica Server returns NULL.

6.Unconnected Lookup Pass one output value to another transformation. The lookup/output/return port passes the value to the transformation calling: LKP expression.

7.Unconnected Lookup Does not support user-defined default values

Q 2. Difference between Active and passive transformation?

Ans: Active transformation :Active transformation can change the no of records passing through it.Active transformations that might change the no of record are advance ext procedure, aggregator, filter, joiner, normalizer, rank, and update strategy, source qualifier

We can connect only 1 active transformation to the same transformation or target can connect any no of pass transformation. Advanced External Procedure/Aggregator/Application Source Qualifier/Filter/Jo iner/Normalizer/Rank/Router/Update Strategy

Passive transformation :Passive transformation can never change the no of records.

Ex. lookup, expression, external procedure, sequence generator, stored procedure

Expression/External Procedure/Mapplet- Input/Lookup/Sequence generator/XML Source Qualifier/Mapplet - Output

Q 3. What is an indicator file and how it can be used?

Ans: Indicator file is used for Event Based Scheduling when you don’t know when the Source Data is available., A shell command ,script or a batch file creates and send this indicator file to the directory local to the Informatica Server. Server waits for the indicator file to appear before running the session.

Q 4. Is lookup a Active transformation or Passive transformation?

Ans: Passive.

Q 5. What are the objects that you can’t use in a mapplet?

Ans :1. COBOL source definition

2. Joiner transformations

3.Normalizer transformations

4.Non reusable sequence generator transformations.

5.Pre or post session stored procedures

6.Target definitions

7.Power mart 3.5 style Look Up functions

8.XML source definitions

9.IBM MQ source definitions

Q6. What is incremental aggregation?

Ans: When using incremental aggregation, we apply capture d changes in the source to aggregate calculations in a session. If the source changes only incrementally and we can capture changes, we can configure the session to process only those changes. This allows the Informatica Server to update our target incrementally, rather than forcing it to process the entire source and recalculate the same calculations each time you we the session.

Q7. What is tracing level and what are the different types of tracing level?

Tracing level represents the amount of information that informatica server writes in a log file.

Types of tracing level

1.Normal

2.Verbose

3.Verbose init

4.Verbose data

Q8. How you will recover sessions?

Ans : If we stop a session or if an error causes a session to stop, refer to the session and error logs to determine the cause of failure. Correct the errors, and then complete the session. The method we use to complete the session depends on the properties of the mapping, session, and Informatica Server configuration.

Use one of the following methods to complete the session:

1. Run the session again if the Informatica Server has not issued a commit.

2. Truncate the target tables and run the session again if the session is not recoverable.

3. Consider performing recovery if the Informatica Server has issued at least one commit.

Q9. When to use Abort, Decode functions?

Ans: Abort can be used to Abort / stop the session on an error condition. If the primary key column contains NULL, and you need to stop the session from continuing then you may use ABORT function in the default value for the port. It can be used with IIF and DECODE function to Abort the session.

Q10 What is constraint based loading ?

Ans Constraint based loading. the data was loaded into the target table based on the Constraints.i.e if we want to load the EMP&DEPT data, first it loads the data of DEPT then EMP because DEPT is PARENT table EMP is CHILD table. In simple terms, it loads PARENT table first then CHILD table.

My ProfileTuesday, 27 January 2009

Informatica interview questions (Part 1)

Q.1 Flat file is having 10 records as input and I want to push 5 records to the target?

Ans : one variable with the name $$count at mapping variable with a Count aggregation typeand initial value for that $$count=0. Create one expression and use SETCOUNTVARIABLEv_count=SetCountVariable ($$Count) Create one filter after expression and put condition v_count <=5

Q2 What is difference between direct and indirect loading options in sessions?

Ans: Use file type direct when we are loading single file into Target. Use Indirect when we want to load multiple files through single session in the mapping

Q3 When we create a target as flat file and source as oracle. How can we have first rows as column names in flat files.

Ans :We can add a union all clause in the Source Qualifier of the Relational source to add the header values in SELECT clause, so as to populate them as header.

Q 4 How to capture the user information (Username) of the person, who is executing a workflow or session in workflow manager.

Ans : SELECT USER_NAME,WORKFLOW_NAME FROM REP_WFLOW_RUN;

Q 5 How to join two tables, which reside in two different databases in the Source qualifier.

Ans: By using DB links in SQ ,but throughput will be very slow. Joiner will be better approach in this case

Q. 6 What is a code page?

A. A code page contains encoding to specify characters in a set of one or more languages. The code page is selected based on source of the data. For example if source contains Japanese text then the code page should be selected to support Japanese text.

When a code page is chosen, the program or application for which the code page is set, refers to a specific set of data that describes the characters the application recognizes. This influences the way that application stores, receives, and sends character data.

Q 7. Is there any way to handle sequence generation over 2 billion records, As sequence generator can generate max 2 billion records

Ans

Method1

it will be done by unconnected lookup create lookup transformation on target just keep one field(id_field) and remove all remaing fields.create i/p field with same data type as out field(id_field) select return port for id_field in loook up override write a query as select nvl(max(id_field),0) from table and ' lookup condition is id_field>=in_field in exp transformation v_dummy(v)--->it always to be zero v_lkp_return iif(:lkp_target(v_dummy) ---here u can pass v_dummy value or just zero o_id_field(0).v_lkp_return+1 __.____._

Method 2

Onw way would be to use expression trans after sequence generator. Another way could be to use expression instead of sequence genearator. Here you will use variable of agg type of count to create sequences.

Q .8 What is difference between $ and $$ parameters/variables

Ans $ are System defined and $$ are User defined variables

Q 9.How to use data from SAP/Oracle Apps /Mainframe

Ans Through power exchange.

Q 10 How to find all invalid mappings in a folder

Ans we can find the invalid mappings information from the rep_all_mappings view with a query Select mapping_name from rep_all_mappings Where subject_area='Folder_Name' And parent_mapping_is_valid <>1 My Profile

Friday, 23 January 2009

Trigger Firing sequence in D2K (Oracle Forms)

This is most important thing to understand in Oracle D2K Forms When you open a form following triggers are executed

First Logon Triggers are fired

1.PRE-LOGON

2.ON-LOGON

3.POST-LOGON

After that Pre Triggers

4. PRE-FORM

5. PRE-BLOCK

6. PRE-TEXT

After that WHEN-NEW Triggers

7. WHEN-NEW-FORM-INSTANCE

8. WHEN-NEW-BLOCK-INSTANCE

9. WHEN-NEW-ITEM-INSTANCE

After that ITEM Level Triggers

After this focus is on the first item of the Block. If you type some data and press the tab key following trigger will fire in sequence

10.KEY-NEXT-ITEM (This trigger is present on the item level).

11.POST-CHANGE (This trigger is present on the item level).

12.WHEN-VALIDATE-ITEM (This trigger is present on the item level).

13.POST-TEXT-ITEM (This trigger is present on the item level).

14.WHEN-NEW-ITEM-INSTANCE (Block Level Trigger).

Now focus will go the next item present on the form.

If there are no further items present on the screen them if we enter data and press tab key then only KEY-NEXT-ITEM is fired. Now suppose we close the form then the item level triggers are fired.

POST TRIGGERS

15.POST-BLOCK

16.POST-FORM

Now the form will be closed. My Profile

Normalizer transformation (Working with VSAM source)

Normalizer transformation is used with COBOL sources, which are often stored in a denormalized format. The OCCURS statement in a COBOL file nests multiple records of information in a single record. We can use Normalizer transformation, to break out repeated data within a record into separate records. For each new record it creates, the Normalizer transformation generates a unique identifier.

Step 1: Create the Copybook for COBOL source

First Step is to get the copybook from Mainframe Team and convert that Informatica Compliant format

It will look like

Normally Highlighted section is provided by Mainframe team convert it into format required by format by adding line above that code (From identification to fd FNAME) and below that code (starting from working storage division). After changes save the file as .cbl file

Normally Highlighted section is provided by Mainframe team convert it into format required by format by adding line above that code (From identification to fd FNAME) and below that code (starting from working storage division). After changes save the file as .cbl file

Point to be taken care while editing .cbl File

You might get following error

identification division.

program-id. mead.

environment division.

select Error at line 6 : parse error

Things to be taken care of

1.Line Select FNAME should not start before column position 12

2.Other line which have been added above and below should not start before column 9

3.All the line in structure (Highlighted above) should end with Dot.

Once Cobol Source is imported successfully you can drag Normalizer source into mapping

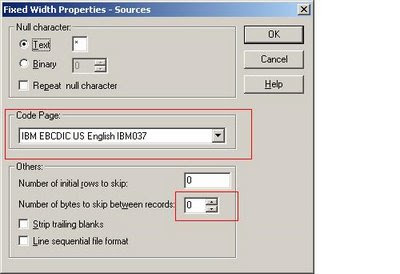

Step 2: Set Workflow Properties Properly for VSAM Source

One you have successfully imported the COBOL copybook then you can create your mapping using VSAM Source. After creating mapping you can create your workflow

Please take care of following properties in session containing VSAM source

In Source Advance File properties set the following options (Highlighted one)

Imp: Always ask for COBOL source file to be in Binary Format, Otherwise you will face lot of problems with COMP-3 Fields

Imp: Always ask for COBOL source file to be in Binary Format, Otherwise you will face lot of problems with COMP-3 FieldsOnce you have set these properties you can run your workflow.

COMP3 FIELDS:

COBOL Comp-3 is a binary field type that puts ("packs") two digits into each byte, using a notation called Binary Coded Decimal, or BCD. This halves the storage requirements compared to a character, or COBOL "display", field. Comp-3 is a common data type, even outside of COBOL

Common issues faced while working with Comp-3 Fields :

If you have created your created cobol source definition with comp-3 fields (Packed Data) but actual data in source file is not packed .So Make sure that in both the definition and source file date is in same format

Check whether COMP-3 fields are signed or unsigned My Profile

Tuesday, 20 January 2009

All About Oracle Synonym

Main use of synonym :

1. Hide Complexity : If you have to access a object owned by another database user then

You have to use syntax Owner.Objectname every time to use that object. Instead of using cumbersome syntax every time we can create synonym for that

Create Synonym Syn_Name for Owner.Objectname

Suppose you have to access Table Tab_Y owner by user User_Y .Before creating synonym you have to use

Select * from User_Y.Tab_Y

Now if we create a synonym

Create Synonym Tab_Y for User_Y.Tab_Y

After creating synonym you can use

Select * from Tab_Y

Note : The object does not need to exist at the time of its creation

2 .Hide Owner/Location : In some applications application designers do not want to reveal the owner and location of object to other user. To achieve this they create synonym

Type of synonym : There are primarily two type of synonym

1. Private : Only owner of synonym can use private synonym By default synonym created is private

Create Synonym Tab_Y for User_Y.Tab_Y .

2. Public : Everyone can use public synonym. Syntax for creating public synonym

Create Public Synonym Tab_Y for User_Y.Tab_Y .

Order of precedence for synonym :

There are lot of confusion when both object and synonym exist in database, which one will be used first. Following is order of precedence

1. Local objects will always be accessed first.

2. If a local object does not exist, the object with a private synonym will be accessed.

3. If a private synonym does not exist or the object does not exist, then the public synonym will be used.

Flip Side of using synonym :

1. There are security issues related with public synonym

2. If there are lot of synonym in database then database performance will degrade i.e. suppose user want to query Tab_A in above mentioned example database parser have to go across large set of synonym and have to put each synonym in library cache along with dependency.

Alternative to using synonyms:

Use logon trigger to set ALTER SESSION SET CURRENT_SCHEMA This will make default schema for unqualified object

create or replace trigger trg_at_logon on database after logonbegin

if user in ('X','Y') then execute immediate 'alter session set current_schema=’DFLT’;

end if

end;

now if user X,Y login their default schema for unqualified object will be DFLT

Misc point about synonym :

We cannot use Drop and truncate command with synonym

Removing Synonyms :

We can use following syntax to drop private synonym

Drop synonym syn_name

To drop public synonnynm

Drop Public synonym syn_name

We can also use force command

Drop synonym syn_name force

The force syntax will force Oracle to drop the synonym even if it has dependencies. It is not a good idea to use the force phrase as it can cause invalidation of Oracle objects.

Query to find Synonym use in database :

Suppose we want to object that reference synonynm test_syn

Select owner, name, type

From dba_dependencies

Where referenced_name =

'TEST_SYN'

and referenced_type =

'SYNONYM'

Common Error with Synonym :

One of the most common error encountered with synonynm is

"ORA-00980: synonym translation is no longer valid"

Main causes for this to happen are

1. One has created a synonym on non-existing object by mistake.

2. You dropped an object but did not drop the synonyms which are referencing the object.

My Profile

Friday, 16 January 2009

New features of Informatica 8

- Target from Transformation :In Infa 8 we can create target from transformation by dragging transformation in Target designer

- Pushdown optimization : Uses increased performance by pushing transformation logic to the database by analyzing the transformations and issuing SQL statements to sources and targets. Only processes any transformation logic that it cannot push to the database.

- New function in expression editor :New function have been introduced in informatica 8 like reg_extract and reg_match

- Repository query available in both versioned and non versioned repositories previously it was available only for versioned repository

- UDF (User defined function) similar to macro in excel

- FTP :We can have partitioned FTP targets and Indirect FTP filesource(with file list).

- Propagating Port Descriptions : In Infa 8 we can edit a port description and propagate the description to other transformations in the mapping.

- Environment SQL Enhancements : Environment SQL can still be used to execute an SQL statement at start of connection to the database. We can Use SQL commands that depend upon a transaction being opened during the entire read or write process. For example, the following SQL command modifies how the session handles characters: Alter session set NLS_DATE_FORMAT='DD/MM/YYYY';".

- Concurrently write to multiple files in a session with partitioned targets.

- Flat File Enhancements :

- Reduced conversion of data types

- Delimited file performance has improved

- Flat file now can have integer and double data types

- Data can be appended to existing flat files

Thursday, 15 January 2009

Inline view

An inline view is term given to sub query in FROM clause of query which can be used as table. Inline view effectively is a named sub query

Ex : Select Tab1.col1,Tab1.col.2,Inview.col1,Inview.Col2

From Tab1, (Select statement) Inview

Where Tab1.col1=Inview.col1

SELECT DNAME, ENAME, SAL FROM EMP ,

(SELECT DNAME, DEPTNO FROM DEPT) D

WHERE A.DEPTNO = B.DEPTNO

In the above query (SELECT DNAME, DEPTNO FROM DEPT) D is the inline view.

Inline views are determined at runtime, and in contrast to normal view they are not stored in the data dictionary,

There are lot of instances when we want data from sub query to available in main query, before oracle 7.3 only option was to create view and use that in sub query

Disadvantage of using this is

1.Separate view need to be created which is an overhead

2.Extra time taken in parsing of view

This problem is solved by inline view by using select statement in sub query and using that as table.

Advantage of using inline views:

1. Better query performance

2. Better visibility of code

Practical use of Inline views:

1. Joining Grouped data with Non grouped data

2. Getting data to use in another query

I wll further add in this post tommorow with practical example of inline views. My Profile

Monday, 12 January 2009

Star Vs Snowflake Schema

In dimension modeling there are mainly two types of schemas

1.Star Schema

2.Snowflake Schema

Star Schema :

Star schema is simplest data warehouse schema .It is called star schema because ER diagram of this schema looks like star with points originating from center. Center of star schema consists of large fact table and points of star are dimensional table.

Star schema is identified by one or more large fact table at center that contain primary information in data warehouse and lot of small dimensional tables each of which contain information about particular attribute of fact tables.

Advantage of Star Schema :

1.Provide a direct mapping between the business entities and the schema design.

2.Provide highly optimized performance for star queries.

3.It is widely supported by a lot of business intelligence tools.

Disadvantage of Star Schema:

There are some requirement which can not be meet by star schema like relationship between customer and bank account can not represented purely as star schema as relationship between them is many to many.

Snowflake is bit more complex than star schema. It is called snow flake schema because diagram of snowflake schema resembles snowflake.

In snowflake schema tables are normalized to remove redundancy. In snowflake dimension tables are broken into multiple dimension tables, for example product table is broken into tables product and sub product.

Snowflake schema is designed for flexible querying across more complex dimensions and relationship. It is suitable for many to many and one to many relationship between dimension levels.

Advantage of Snowflake Schema:

1.It provides greater flexibility in interrelationship between dimension levels and components.

2.No redundancy so it is easier to maintain.

Disadvantage of Snowflake Schema :

1.There are More complex queries and hence difficult to understand

2.More tables more joins so more query execution time.

Saturday, 10 January 2009

Dimensional Data Modeling

It is a modeling technique used in data warehousing systems. It is different from ER modeling technique used in OLTP systems. In Dimensional modeling a model of tables is combined together with aim of optimized query performance in Decision Support systems in relational databases.

Before further going into details of dimensional data modeling we should be aware of key concepts in Dimensional Data Modeling

Dimension : It can be considered as category of information. For example geographic dimension. It provides a way to slice and dice the data in data warehouse.

Attribute : Attribute can be considered as characteristic of dimension. For example region is attribute of geographic dimension. Dimension attribute is column in dimension table.

Hierarchy : It represents relationships between attributes of dimension. It defines different level with in dimension, In case of geographic dimension it is like

Continent ->Country –> Region –> State ->City ->Street

Fact Table : It represents the measure of interest, for organization product revenue is measure of interest. The level of granularity is an important factor in designing fact tables. For example it can be revenue by continent or revenue by country. In this example fact table will have three columns Product, Geographical region, Revenue.

Lookup Table: The look up table represents a dimension and constitutes attributes for dimension. Lookup table for product will consist of all products available.

Dimensional Modelling Vs ER Modelling :

In Dimensional Modelling emphasis is on optimising decision support query performance,On other hand ER Model are focussed on

- Removing redundancy in data model

- Optimse OLTP Performance

- Focus is on retrieval of single record

We will continue with dimensional data modelling in next post.

My ProfileWednesday, 7 January 2009

High Water Mark (Oracle)

High-water mark indicates the point up to which table or index has ever contained data.Let me explain .Suppose we create an empty table,the high-water mark would be at the start of the table segment

Now if we insert some data into the table segment, the HWM moves and it will be at position up to which the data is in the segment

Now if we insert some data into the table segment, the HWM moves and it will be at position up to which the data is in the segment If insert more data in the table segment, HWM moves further to point the position up to which the data is in the segment

If insert more data in the table segment, HWM moves further to point the position up to which the data is in the segment As we can see above on deleting the data, HWM does not move. The drawback of this is that oracle always read the blocks up to high water mark in case of full table scan. You must have noticed that doing a count (*) on empty table, takes time to show you no rows. The reason for taking so much time is setting of HWM at higher position.

As we can see above on deleting the data, HWM does not move. The drawback of this is that oracle always read the blocks up to high water mark in case of full table scan. You must have noticed that doing a count (*) on empty table, takes time to show you no rows. The reason for taking so much time is setting of HWM at higher position.Now you must be thinking , how to set the high-water mark at lower position ?

The only method to set the HWM is to truncate a table.

Let us see how truncate set the HWM.

Monday, 5 January 2009

All About Oracle Sequences

Oracle sequence is a database object that is used to generate unique numbers,It is mainly used for primary key values.

Syntax

Syntax for creating sequence is

Important option need to be considered

CYCLE/NOCYCLE:If we specify cycle option while creating sequence then it will continue to generate values even after reaching it's max value. It will again start with min value. Nocycle means it will not generate value after reaching max value. Default is no cycle.

CACHE/NOCACHE : It specifies how many value oracle pre-assign and keep in memory for fast access. Nocache specifies that no value is pre-assigned. If we don’t use any option then default value is 20.

Flip side of using a sequence with cache is that if some system failure occurs then all cached values, which were not used, will be lost. For example you were using a sequence, which was, having cached value equals to 100 and oracle had used only 30 values then you will lose 70 sequence values.

Things to remember while using sequences

1. In a session sequence.nextval must be referred before using sequence.currval .if you try to use current value before using nextval in session you will get following error ORA-00904: "SEQ_TEST"."CURRENTVAL": invalid identifier.

2. In other case suppose you have insert 20 records with sequence the issue rollback then sequence will not be rolled back. Next time oracle will use 21st value. Oracle has wisely chosen this option so that multiple users can use sequence without any concern of duplicate sequence value.

3. Creating sequences with NOCACHE will have performance impact.

4. We can not use sequence when the UNION operator combines SELECT statements.

Enhancement in 11G for sequences

In 11G we can use variable assignment for sequences like

V_seq_11g := test_SEQ_11G.NEXTVAL;

For doing same in 10G and prior we have to use SELECT TEST_SEQ_10G.NEXTVALINTO v_seq_10g FROM DUAL;